Project Information

- Category: Data Science

- Project Date: March 2024

-

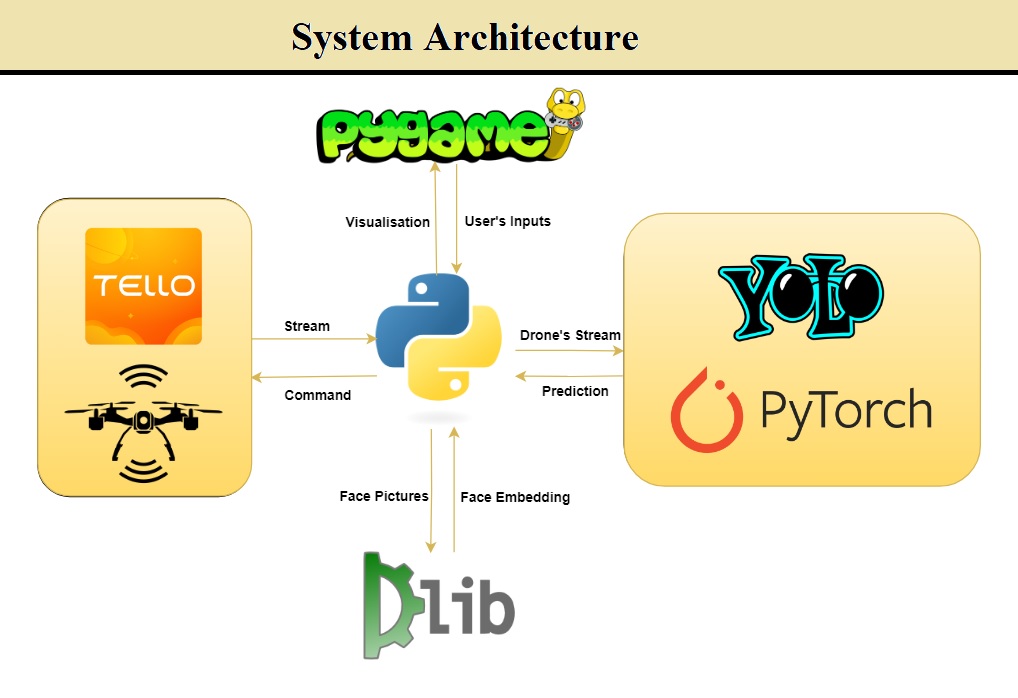

Frameworks:

YOLOv9, Faster-RCNN, Face Recognition, PyTorch, MediaPipe

-

Skills:

Computer Vision, Data Science, Machine Learning

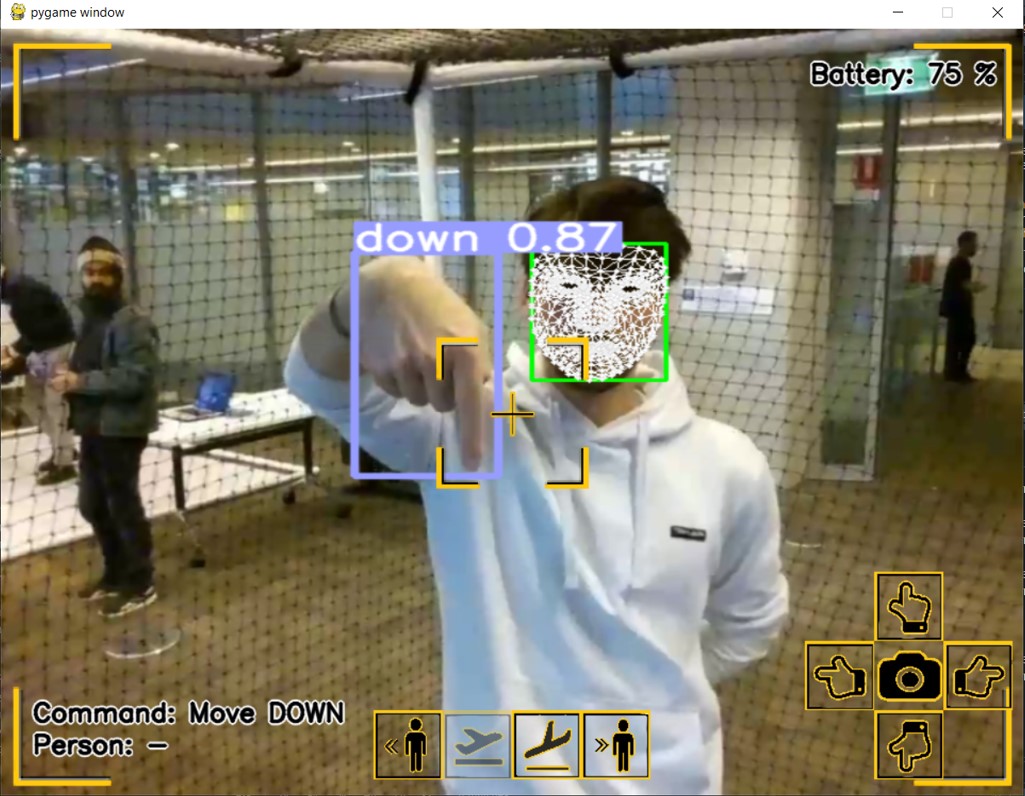

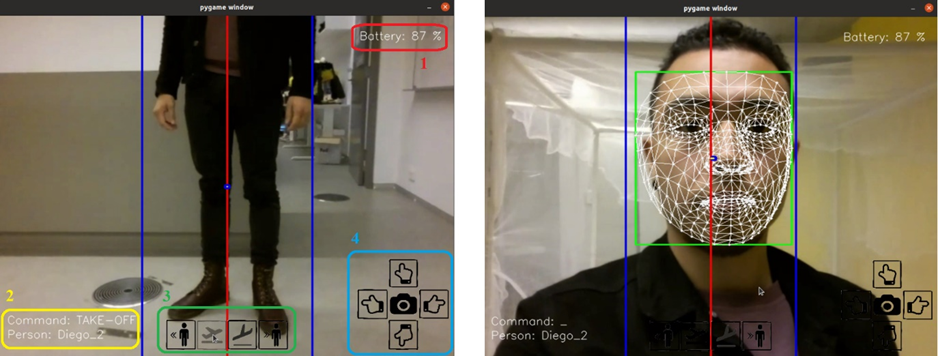

Hand Sign Gesture Drone Steering

I developed a drone control system that is steered by hand sign gestures and uses face recognition to authenticate the pilot. Using YOLOv9, I trained a model that distinguishes nine specific hand gestures with 97.7% accuracy. A Python face recognition library authenticates the pilot in real time, and a face tracking algorithm keeps the user's face centered in the frame by adjusting the drone's perspective. For training, validation, and testing, I collected and annotated a dataset of 10,000 images.
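
The face tracking step can be illustrated with a minimal sketch. It is not the project's actual code: it assumes a simple proportional controller, and the names `FaceBox` and `centering_command`, the gain, the deadband, and the speed limit are all hypothetical values chosen for illustration. The idea is to measure how far the detected face sits from the frame center and turn that offset into yaw and vertical speed commands.

```python
from dataclasses import dataclass


@dataclass
class FaceBox:
    """Face bounding box in pixels (hypothetical detector output)."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def clamp(value: float, limit: float) -> float:
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def centering_command(
    box: FaceBox,
    frame_width: int,
    frame_height: int,
    gain: float = 100.0,      # assumed proportional gain
    deadband: float = 0.05,   # assumed tolerance around the center
    max_speed: float = 50.0,  # assumed command limit
) -> tuple[float, float]:
    """Return (yaw, vertical) speed commands that move the face toward the frame center."""
    # Center of the face in pixel coordinates.
    cx = box.x + box.width / 2
    cy = box.y + box.height / 2

    # Normalized offsets in [-1, 1]; dy is positive when the face is above center.
    dx = (cx - frame_width / 2) / (frame_width / 2)
    dy = (frame_height / 2 - cy) / (frame_height / 2)

    # Ignore small offsets to avoid jitter, then scale and limit the command.
    yaw = 0.0 if abs(dx) < deadband else clamp(gain * dx, max_speed)
    vertical = 0.0 if abs(dy) < deadband else clamp(gain * dy, max_speed)
    return yaw, vertical
```

For example, with a 640x480 frame, a face centered in the frame yields `(0.0, 0.0)`, while a face to the right of center yields a positive yaw command. How those values map to actual motion depends on the drone's SDK, which is not specified here.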